Saturday, July 28

“Can We Have a Group Test?” Designing Collaborative, Active, Alternative Assessments for Physics Classes (Kelly O’Shea, Danny Doucette)

On Saturday, I attended Kelly O’Shea’s and Danny Doucette’s all-day workshop on lab practicums. All of their slides and handouts are here: AAPT Collaborative Group Practicum Exams Workshop.

For the first half of the day, Kelly and Danny had us in “student mode” as we performed several of the practicums in groups and then shared our experiences with the rest of the participants.

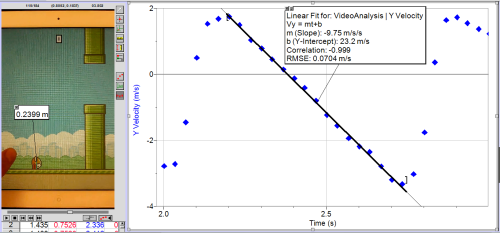

There were two rounds of practicums. Round 1 had practicums on friction, kinematics, and energy. Round 2 had practicums on calorimetry, internal resistance, two-slit diffraction, and rolling projectiles.

After lunch came dedicated “work time,” when we developed new practicums of our own, either alone or with others. One group of participants, led by Val Monticue, developed a generalized grading rubric for practicums. I worked on two things: (1) designing a new practicum with several other teachers that ties together energy, momentum, and friction; (2) designing “leveled practicums” with Jenn Broekman.

The set-up for the new practicum is an adjustable pendulum with a 100-gram bob that swings down and strikes a 100-gram tissue box (mostly empty), causing the tissue box to slide across the table. The practicum has 2 parts: (1) Vary the release height of the pendulum so that the pendulum comes to rest upon impact with the tissue box. (2) Using the data from the first part, predict the release height needed for the tissue box to slide a given distance (preferably so that the box reaches the edge of the table but does not slide off).

A lot of time went into figuring out what size and mass the pendulum and box needed to be so that the box slid a decent distance when struck. At first, we tried a small block several centimeters thick. The block had a tendency to spin when struck, and sometimes the pendulum swung over it. Then we tried a larger wood block. It was tall enough that the pendulum didn’t swing over it after impact, but it didn’t slide very far because of its greater mass. Finally, we discovered that a tissue box worked really well: it was tall and light. Coincidentally, the mostly empty tissue box and the pendulum bob had the same mass (100 grams).

Once we got the set-up working, we tested the practicum to see whether it would actually work. First we found the “sweet spot” at which the pendulum comes to rest after striking the tissue box. From the release height of the pendulum and the distance the tissue box slid after impact, we could calculate (1) the speed of the pendulum right before hitting the box; (2) the speed of the box immediately after being hit; (3) the coefficient of friction between the box and the table; and (4) the percentage of kinetic energy lost in the collision. Assuming the percentage of kinetic energy lost is the same in all collisions between the pendulum and the box, we calculated the pendulum release height needed for the box to slide 1 meter. Our predicted height (~40 cm, if I recall correctly) was much smaller than the actual height needed (~80 cm). So while the prediction didn’t work out, we think the practicum is still fun and challenging and ties together lots of different topics. Having students reflect on their assumptions and explain why the actual height is larger than the predicted height would be valuable, too.
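For anyone who wants to check the numbers, here is a minimal Python sketch of the calculation chain above. It rests on simplifying assumptions: no energy is lost during the swing, the bob is exactly at rest after impact (so conservation of horizontal momentum gives the box's speed), and the box slides with a constant coefficient of kinetic friction. The function names, the value g = 9.8 m/s², and the sample trial values (h0 = 0.15 m, d0 = 0.30 m) are hypothetical placeholders, not measurements from the workshop; only the 100-gram masses come from our set-up.

```python
import math

G = 9.8  # m/s^2; assumed value for gravitational acceleration


def analyze_sweet_spot(h0, d0, m_pend, m_box):
    """Analyze the (hypothetical) trial where the pendulum bob stops on impact.

    h0: release height of the bob above its lowest point (m)
    d0: distance the box slides after being struck (m)
    Assumptions: no energy lost during the swing, horizontal momentum
    conserved in the collision, constant kinetic friction while sliding.
    """
    v_pend = math.sqrt(2 * G * h0)        # (1) bob speed just before impact
    v_box = m_pend * v_pend / m_box       # (2) box speed just after impact (bob at rest)
    mu = v_box ** 2 / (2 * G * d0)        # (3) friction work mu*m_box*G*d0 equals box's KE
    ke_before = 0.5 * m_pend * v_pend ** 2
    ke_after = 0.5 * m_box * v_box ** 2
    frac_lost = 1 - ke_after / ke_before  # (4) fraction of KE lost in the collision
    return v_pend, v_box, mu, frac_lost


def predict_release_height(d_target, mu, frac_lost, m_pend, m_box):
    """Release height for the box to slide d_target, assuming the same
    fraction of kinetic energy is lost in every collision."""
    ke_box = mu * m_box * G * d_target    # KE the box needs right after impact
    ke_pend = ke_box / (1 - frac_lost)    # KE the bob needs right before impact
    return ke_pend / (m_pend * G)         # from m_pend * G * h = ke_pend


# Hypothetical sweet-spot trial (not workshop data); 100 g masses as in our set-up.
m_pend = m_box = 0.100  # kg
v_pend, v_box, mu, frac_lost = analyze_sweet_spot(0.15, 0.30, m_pend, m_box)
h = predict_release_height(1.0, mu, frac_lost, m_pend, m_box)
print(f"mu = {mu:.2f}")                         # mu = 0.50
print(f"predicted release height = {h:.2f} m")  # predicted release height = 0.50 m
```

One thing the sketch makes visible: in this idealized model the prediction always reduces to scaling the sweet-spot height, h = h0 · d_target / d0, whatever the masses. With equal masses and the bob fully stopped, it also implies that essentially no kinetic energy is lost in the collision.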

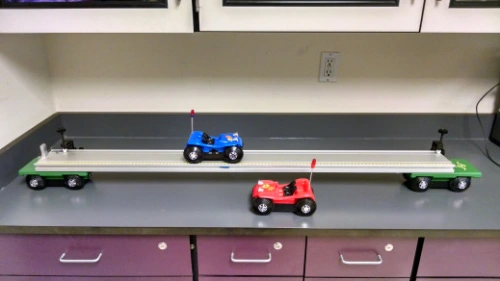

For the remainder of the work time, Jenn Broekman and I turned the traditional constant velocity lab practicum into a “leveled” practicum. The idea came from Kelly O’Shea, who suggested that day that practicums could be redesigned so that all students feel successful. Jenn and I broke the buggy practicum into 3 levels (easier, regular, more challenging). Here’s what we came up with:

We think these could be deployed in several ways. One option is for all students to start with Level 1 and work through as many levels as they can in the time allotted. Hopefully by the end of class, all groups will have completed at least the Level 1 task. One drawback is that some groups that could succeed at Level 3 might never get to try it because they spent most of the period on Levels 1 and 2. So another option is for students to choose the level they feel most comfortable starting with. In this case, one group could start with Level 3, spend the whole period on it, and succeed in the end; another group might need the whole period for Level 1; and another might start with Level 1 and then move on to Level 2.

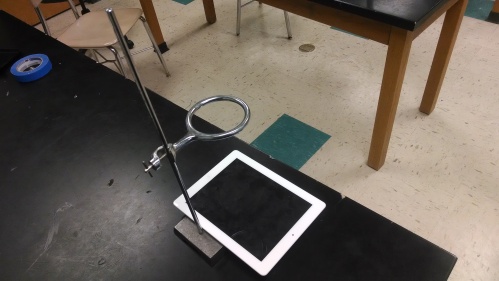

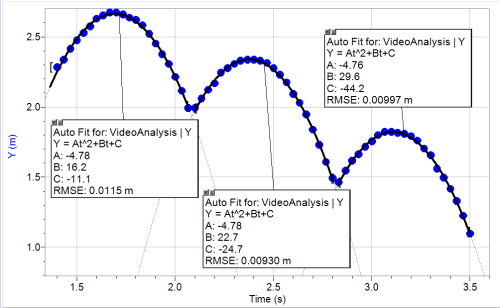

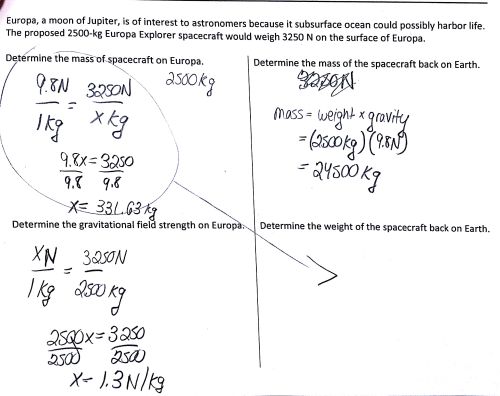

After work time, everyone shared what they worked on. Here’s a practicum developed by another group. It’s about electromagnetic induction:

After the workshop, I walked back to my hotel, which took me past the White House. There were several protesters there, and a person dancing and wearing a Trump mask.

More updates about the rest of the conference in future posts!